Search results for tag #backup

Backing up the Taler wallet with Seedvault

Taler are like cash. If you don't back up your Taler, you may lose everything. This article shows how to back up the Taler app with Seedvault. Seedvault can also be used to back up a custom-ROM Android system completely.

I swear, if I didn't make mistakes, I'd make nothing at all.

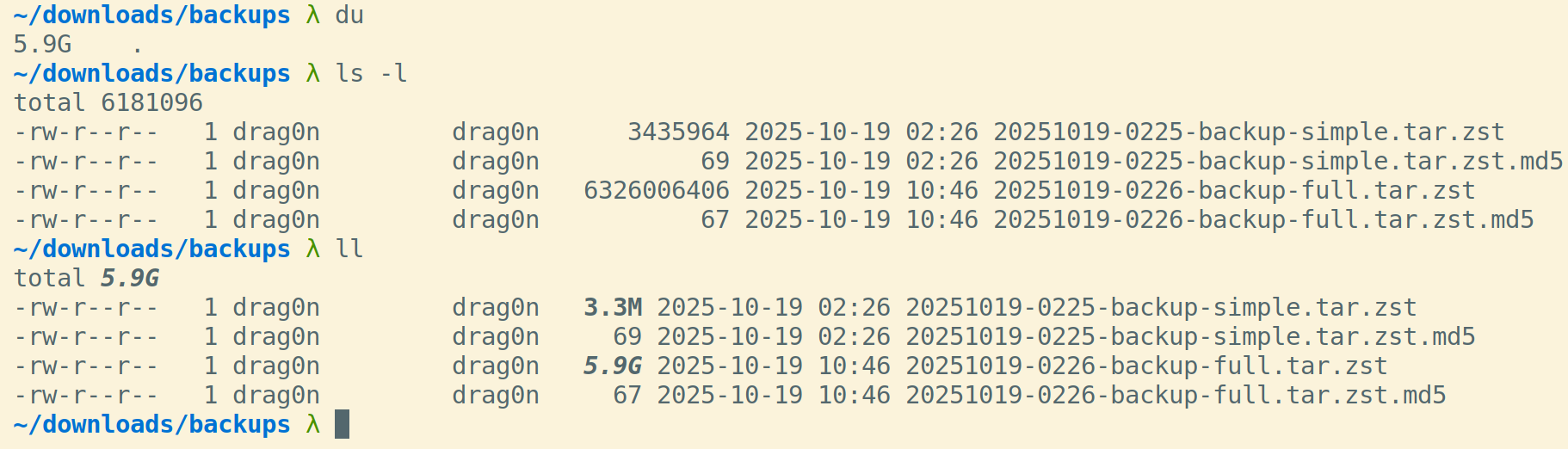

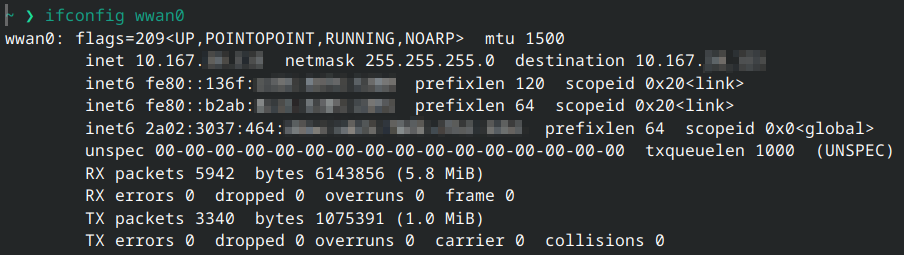

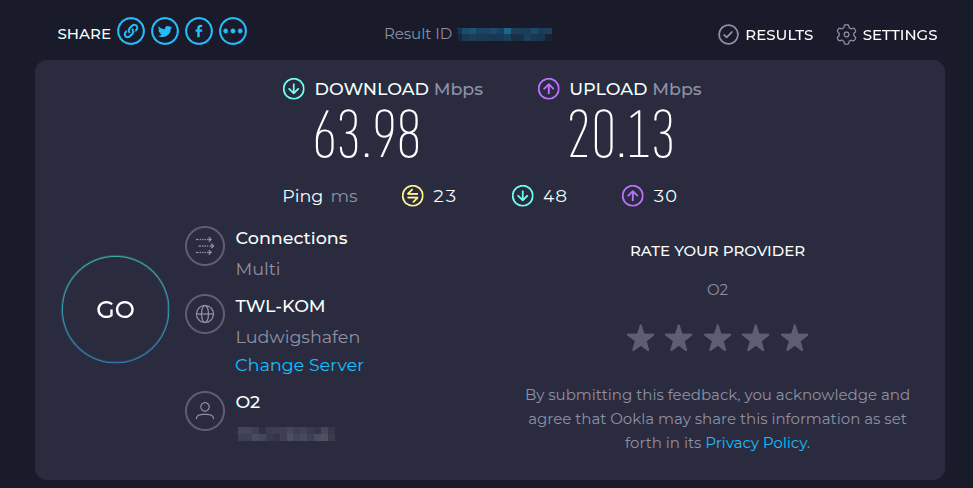

This time, I somehow managed to delete all the data from my Nextcloud folder on my laptop AND on my Nextcloud server. Good thing I have backups. The latest was just last night. It'll take a while, but at least I can recover.

Keep proper backups, kids!

I really like Veeam's backup solution. On Linux in particular, it makes image-based backups easy, even with ext4. Unfortunately, the scheduling is not good, especially on Linux. So I built a solution with systemd. I wrote a blog post that describes how it works. At the end of the post you'll also find a direct link to the code.

https://cperrin.xyz/2026/01/28/veeam-backups-unter-linux-mit-systemd-triggern/

Time Machine inside a FreeBSD jail

Learn how to back up your Mac by setting up a Time Machine instance inside a FreeBSD jail using BastilleBSD and Samba. A quick and "painless" way to leverage ZFS snapshots for your macOS backups.

https://it-notes.dragas.net/2026/01/28/time-machine-freebsd-jail/

#ITNotes #FreeBSD #Apple #Backup #Data #OwnYourData #Server #TimeMachine #Tutorial #zfs #BastilleBSD #Bastille #Samba

Yeah! #next #s3 #backup I'm going to set this up as an encrypted external back end for my #nextcloud

Thanks @nextinpact

New blog article:

Here is my detailed article on the very good backup program KopiaUI:

https://digital-cleaning.de/index.php/kopiaui-backup-fuer-fortgeschrittene-win-macos-linux/

(A quick boost would be very nice, because the article took quite a lot of time, but it's not a must :-) ).

Well, after quickly testing the two cheapest consumer-grade #backup #borgbackup offerings on the market that I could find, I'd say, in short:

- #Hetzner: cheaper per TB, allows finer-grained configuration.

- #Borgbase: cheaper per TB, but: installation and configuration are much easier and faster, with nice little features such as email alerts when no automatic backup has run for a chosen period.

@schenklklopfer @herrLorenz #Windows alone is a compromise.

- Expecting #Linux to simply be a 1:1 drop-in replacement is like expecting that an #ElectricMotor can simply be fitted into a car in place of a #Diesel and that the car will be street-legal without a #TÜV inspection!

For the vast majority of "#Normies", who only use the #PC as a web-surfing machine with #Browser, #eMail, #Office & #Multimedia playback anyway, it works without problems:

- Make a real #Backup, install #UbuntuLTS over it, copy the data across & done!

The problem is not Linux, though, but vendors of crappy #Hardware & #Software who refuse to cooperate or consistently work against their #users!

- See #Adobe & #MicrosoftOffice, which use undocumented functions in #Windows to actively detect #Wine & co. and stop working!

This is where #regulators like @BMDS & @EUCommission should step in, criminalize such practices, and mandate #interoperability and #portability!

#UnbequemeWahrheit #Enshittification #Unix #Windows10 #Windows11 #EndOf10

And you, what about your 2026 New Year's resolutions? Have you backed them up yet?

I've (finally) published my post about setting up backups with Borg, Borgmatic and BorgBase for my NAS and my server at OVH.

When was your last backup? Last year? And have you tested it?

If it can be useful to some of you, it'll be a good start to the year.

Quick #backup review: my backup policy is no longer quite up to scratch. 🤔

Currently, I have:

- a daily, encrypted incremental backup of my laptop at home, made with #DejaDup, on a small external spinning hard drive.

- my important (but non-critical) documents are synchronized to my server

- those same documents are resynchronized to another "spare" laptop and to HuitAns's PC.

That's already much better than most people, I know.

I'm in the middle of fairly intensive testing of #SeedVault. You know, the thing built into custom #Android OSes for making a #backup of your smartphone.

Would a report interest you?

If so, as toots, or as a blog post?

What information would you like to see?

Boosting washes the hair on your head.

RE: https://mastodon.social/@Migueldeicaza/115713071946346627

Please, please, please don't rely on big tech to keep your data safe. They will carelessly cut you and laugh while you're bleeding.

#backup #dataSovereignty #Apple #bigTech #selfHosting

Suppose a chap had two 240 GB SSDs in his server, configured under Linux LVM to work as one larger 480 GB drive... suppose he wanted to back 'the lot' up to a much larger single (USB external) drive so that in the event of a serious system failure all would not be lost... if the server had a single drive then he'd just take a normal disk image, but is that viable with the two drives configured as one? #linux #backup

CW: Blogpost Restic

A late-stage intro to backups with restic:

Some keep talking about restic being the best personal backup system around, while I had to learn that for others, even experienced Linux and macOS users, it's an absolute mystery.

Here’s a usage scenario I suggest for restic beginners today.

https://binblog.de/2025/12/10/a-late-stage-intro-to-backups-with-restic/

I know I've said this before but I think I've finally found the sweet spot for #AV1 encoding for preserving my media. This setting seems to preserve film grain and everything. I essentially turned it up to preset 3, crf 20 and replaced all the film grain synthesis options and such I've been trying with just variance boost, since that's what's in the default "Super HQ AV1" profile that comes with Handbrake.

I filmed with my phone to hopefully make sure y'all can see what I'm seeing.

Yo Fedi!

Is there anyone here who uses Backblaze with Linux? Do you use the CLI client and scripts, or a third-party tool from this list at the link? Infodump on me please.

https://www.backblaze.com/cloud-storage/integrations?filter=backup

The backup magic continues! ✨ Proxmox Backup Server 4.1 brings even more performance and stability to your backups.

Update and sleep soundly! 😴

Link: https://www.proxmox.com/en/about/company-details/press-releases/proxmox-backup-server-4-1

#Proxmox #Backup #ITInfrastructure #Sysadmin #DataProtection #PBS4

I built a restic backup configurator; the settings are stored in the browser.

You can configure everything there and then just need to copy the finished command.

It's not quite finished yet, though; it was originally meant mainly for my own private use, because I didn't feel like constantly looking up the commands.

Just give it a try:

New blog post!

It is about how this Mastodon instance is backed up daily without downtime =)

https://www.yevi.org/blog/daily-matrix-and-mastodon-backups-with-zero-downtime

#Mastodon #Matrix #SelfHosted #SelfHosting #SelfHost #HomeLab #Ansible #Proxmox #Backup #PBS #ProxmoxVE #Blog #GlitchySocial

There is a rule that you should regularly test restoring your #backups.

Do you have any processes/recommendations to do this in an automated(!) way? For more machines? Ideally w/ @restic?

What does "restore works" mean for you? Backup repository integrity checks? Successfully extracting a certain subset of files? Extracting all files (which takes quite some time/disk space)? Comparing checksums/contents of certain files? (But what about newer versions on the backup source?)
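One possible reading of "restore works", sketched under assumptions: restore a snapshot into a scratch directory (for example with `restic restore`), then compare SHA-256 checksums of a chosen subset of files against the source. The names `sha256_of` and `compare_trees` and the directory layout are hypothetical, not part of restic. Files that changed on the source after the backup will show up as mismatches, so this only fits data that stays stable between backup and test.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_trees(source: Path, restored: Path, relative_paths: list[str]) -> dict[str, str]:
    """Compare selected files; return {relative_path: problem} for each failure.

    Assumption: `restored` is a directory that a restore run has already
    populated; this function does not invoke any backup tool itself.
    """
    problems: dict[str, str] = {}
    for rel in relative_paths:
        src, dst = source / rel, restored / rel
        if not dst.is_file():
            problems[rel] = "missing in restore"
        elif not src.is_file():
            problems[rel] = "missing on source"
        elif sha256_of(src) != sha256_of(dst):
            problems[rel] = "checksum mismatch"
    return problems
```

An empty result means every sampled file was restored byte for byte; run per machine from a scheduler, this covers the "certain subset of files" option without extracting everything.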

📰 Today I published an article on how to automate backing up your LockSelf/LockPass passwords with a small command-line tool I developed last week:

➡️ https://www.wanadevdigital.fr/355-lockpass-automatiser-la-sauvegarde-des-mots-de-passe/

Happy reading! 😁

I recently discovered upgrade.site (https://man.openbsd.org/install.site.5) and decided to implement an auto-upgrade process for my two VMs hosted at @OpenBSDAms

But, since I need to ensure it works, I'm testing borgmatic as a backup solution :) #openbsd #doyourbackups #backup

installed timeshift on the PC (just for the system, 56 GB all the same, I need to see about optimizing/slimming that down)

set up a VM to centralize personal data with #borgbackup2.

a bit of a struggle with related topics (networking and disks), but once I had the borg2 docs at hand it went rather well.

now I need to think about organizing my data a bit better to make backups easier

...

and document it a bit.

I don't want to leave my server in "almost up to date" mode.

The complete guide to installing and configuring automatic updates for your PBS, without surprise crashes: ➡️ https://wiki.blablalinux.be/fr/update-pbs-script-cron

An inexpensive and very energy-efficient cold storage solution. https://retzo.net/wakeonstorage/ #stockage #backup #écologie #raspberrypi #exemple

My backup is down.

How timely, I have just been trying to back up priceless footage shot for a music video for a song where "my backup is down" is spelled out verbatim.

While I wait for a reply from the Btrfs mailing list, I am tempted to buy a bigger drive. Like a 20 TB one.

But then I'd really need two to have redundancy, and that would be a tad bit crazy...

If you missed my "announcement" about the music video, here it is:

https://mastodon.social/@unfa/115412018567684691

#Btrfs #SysAdmin #Backup #Music

"Untested backups are just expensive hopes and dreams."

Did some proper restore-tests of my offsite backups and restored them one after another into a local virtual machine (KVM) and verified that they decrypt, restore and boot correctly 🙂 (including our Mastodon instance burningboard.net)

It's good to have backups, but it's an even better feeling when you know they work, restore correctly, and the procedure has been tested.

All check marks green, next test in January 2026 🙂

#linux #freebsd #backup #restore #devops #it #mastoadmin @tux

[…], fedora, etc. (but not arch) don't offer to set up remote backups to the backup server right away?